Scrapy logs comprehensive information about the crawl; as you go through the logs, you can understand what is happening in the spider. The `parse` function is invoked after each URL in `start_urls` is crawled. You can use this function to parse the response, extract the scraped data, and find new URLs to follow by creating new requests (`Request`) from them.

The class `AlibabaCrawlerSpider` inherits from the base class `scrapy.Spider`. The `Spider` class knows how to follow links and extract data from web pages, but it doesn't know where to look or what data to extract; the subclass has to supply both.

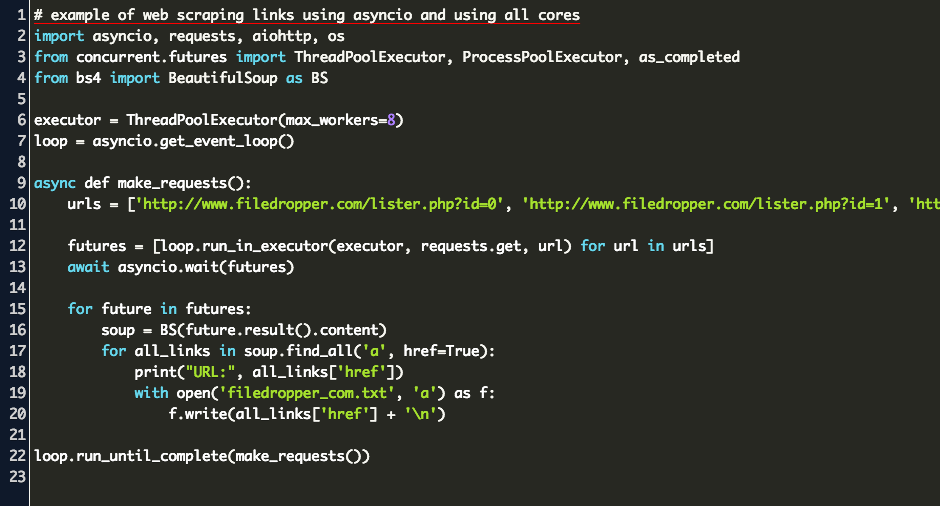

The `scrapy startproject` command creates a Scrapy project with the project name (`scrapy_alibaba`) as the folder name. It will contain all the necessary files, with proper structure and basic docstrings for each file, in a layout similar to:

- `scrapy_alibaba/`: project root directory
  - `scrapy.cfg`: contains the configuration information to deploy the spider
  - `scrapy_alibaba/`: the project's Python module
    - `items.py`: describes the definition of each item that we're scraping
    - `spiders/`: all the spider code goes into this directory

Scrapy has a built-in command called `genspider` to generate the basic spider template. Let's generate our spider by running `scrapy genspider alibaba_crawler` followed by the domain to crawl. This creates a `spiders/alibaba_crawler.py` file for you with the initial template to crawl. The code should start with `# -*- coding: utf-8 -*-` and define `class AlibabaCrawlerSpider(scrapy.Spider):`.

You can find more details on installation in the Scrapy documentation.

Create a Scrapy Project

Let's create a Scrapy project using the following command: `scrapy startproject scrapy_alibaba`.

Scrapy is one of the most popular open source web scraping frameworks. Written in Python, it has most of the modules you would need to efficiently extract, process, and store data from websites in pretty much any structured data format. Scrapy is best suited for web crawlers that scrape data from multiple types of pages.

In this tutorial, we will show you how to scrape product data from Alibaba, the world's leading marketplace.

To start, you need a computer with Python 3 and pip; install them first if you do not have them. Then install the packages with `pip3 install scrapy selectorlib`.
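One way to confirm the install step worked is to ask Python which versions of the two packages it can see. This is a small sketch using only the standard library; the helper name `installed_version` is made up for this example.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of a package, or None if absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Report the status of the two packages this tutorial relies on.
for package in ("scrapy", "selectorlib"):
    print(package, installed_version(package) or "not installed")
```

If either package prints "not installed", rerun the `pip3 install` command with the same Python 3 interpreter you plan to run the spider with.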
